Let’s face it, detecting malicious activity can be a challenge. Immense log volume, analyst alert fatigue, and ever-changing business processes are just some of the common problems. At Cedar, building effective detections is especially important since we deal with PHI regularly; however, developing detections can be just as difficult as deciding which detections to build. This is especially true when development tooling doesn’t match production tooling. Leveraging Panther, our Security Information and Event Management (SIEM) tool, Cedar has formulated a development workflow to help solve these problems, allowing us to test, deploy, and monitor stateful, real-time detections.

Through our new development workflow, we have been able to expand the types of activity we can identify in real time, including threshold-based alerts, session tracking, detection chains, and broader alert logic in general. By working with the right tools, we get the alerts for these detections as events arrive, without having to wait for a query to run on a predefined schedule.

Cedar’s Security POV

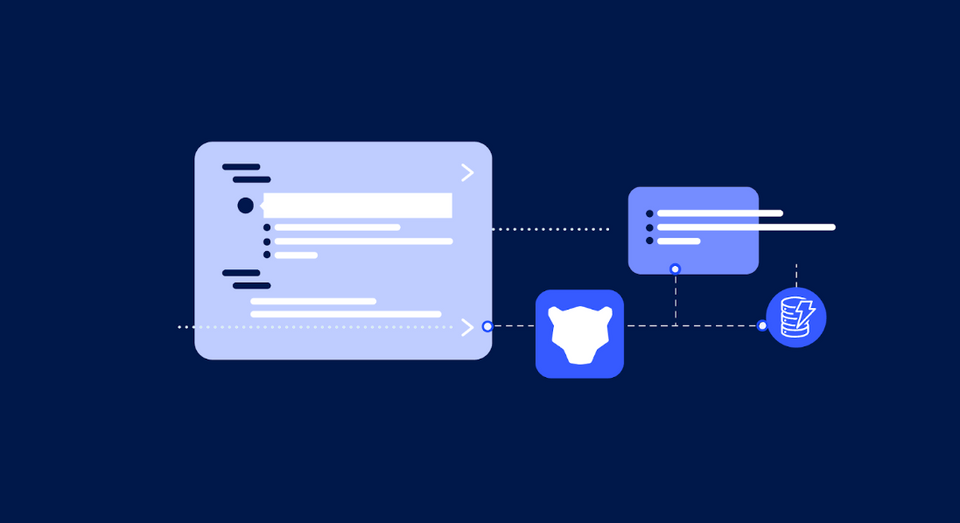

Panther, a cloud-native SIEM tool, has been a close partner of Cedar for years because it helps keep our security program “simple and scalable to stay secure” by defining detections as code. While many modern security teams are learning proprietary query languages and navigating ever-changing tool interfaces, we are writing our detections in Python and tracking changes to those detections, runbooks, and automation with the panther_analysis_tool and GitHub Actions.

Panther’s detection functions run on every individual event across all configured log sources. This is fundamentally different from many SIEM tools that rely primarily on regularly scheduled queries. We like this approach because detections are written as Python code that runs on every single log, rather than as query-based detections, which allows alerts to be delivered in real time. Panther also ships with caching helper functions that store key-value pairs in an AWS DynamoDB table for use across detection runs.
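As a rough illustration of the per-event model, here is a minimal sketch of a detection in the rule/title/severity function shape that Panther rules use. The CloudTrail-style field names and the specific event names checked are assumptions chosen for the example, not one of Cedar’s actual detections.

```python
# Illustrative only: the event names and fields below are assumptions.
SENSITIVE_EVENTS = {"DeleteTrail", "StopLogging"}


def rule(event):
    # Called once for every log event; returning True raises an alert.
    return event.get("eventName") in SENSITIVE_EVENTS


def title(event):
    # Build a human-readable alert title from the event itself.
    actor = event.get("userIdentity", {}).get("arn", "unknown actor")
    return f"CloudTrail logging changed by {actor}"


def severity(event):
    # A fixed severity here; it could also be computed per event.
    return "HIGH"
```

Because the function sees one event at a time, any state shared between events has to live somewhere else, which is where the cache comes in.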

Caching is the Key

- Caching allows for event chaining between detection runs. Detections can wait for a specific cache state before triggering, or an upstream detection can influence the logic or severity of another. For example, an alert for manual activity from an AWS Admin in a production account might be marked as a “High” alert; however, if a separate detection for a potential access enumeration event fired recently for the same user’s account, the severity could be elevated to “Critical.”

- Related events can automatically trigger follow-up alerts based on indicators like IP address, username, and hostname when upstream detections store those indicators in the table.
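The severity escalation described above can be sketched as follows. To keep the example self-contained, the cache is a plain in-memory dictionary with two hypothetical helpers (`put_flag`, `has_flag`); Panther’s real helpers persist to DynamoDB and have their own names and signatures. The event fields and key format are also assumptions.

```python
import time

# In-memory stand-in for the DynamoDB-backed cache (hypothetical helpers).
_CACHE = {}


def put_flag(key, ttl_seconds):
    # Store an expiry timestamp so the flag ages out on its own.
    _CACHE[key] = time.time() + ttl_seconds


def has_flag(key):
    expires_at = _CACHE.get(key)
    return expires_at is not None and expires_at > time.time()


def enumeration_rule(event):
    # Upstream detection: remember which user triggered it for one hour.
    if event.get("eventName") == "ListUsers" and event.get("errorCode") == "AccessDenied":
        put_flag(f"enum:{event['user']}", ttl_seconds=3600)
        return True
    return False


def admin_activity_severity(event):
    # Downstream detection: escalate if the upstream one fired recently.
    return "CRITICAL" if has_flag(f"enum:{event['user']}") else "HIGH"
```

The two detections never call each other; they only agree on the key format, which is what makes chaining through a shared table workable.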

Although the caching feature is incredibly useful, we discovered some limitations in Panther’s native implementation that slowed down detection engineering. Those were:

- Testing detections from the UI directly altered the production DynamoDB table

- Mocking the caching functions was tedious and required us to modify our detections to handle the unique output.

- Detections that were based on high thresholds required tests to be run repeatedly until the threshold was met.

We still wanted to expand our use of caching, so we needed to find or build a solution that met our specific needs. Our requirements for an effective caching solution for local development (i.e. on an engineer’s laptop) were:

- Avoids interacting with the production DynamoDB table

- Supports loading “Historical” or pre-configured test data for any detections using caching

- Allows testing via a Github Action as part of our CI/CD process

- Minimally impacts detection code (we don’t want to alter detection logic just to test)

- Shouldn’t be too complex (we don’t want more to maintain)

Cedar’s Solution: Local Caching

After brainstorming and researching, we settled on an implementation leveraging AWS’s local DynamoDB. A local DynamoDB instance puts the developer fully in control of the cache and allows unrestricted experimentation and visibility, safely, in an environment that uses the same services and tools as the production cache.

Amazon maintains excellent documentation for their local DynamoDB Docker image, so the basic implementation was quite simple*. Panther was kind enough to provide their basic schema for their production cache table in DynamoDB, and that was all we needed to get started. For more details on this bit, see Amazon’s documentation and our docker compose file for spinning up a local in-memory DynamoDB instance.

*It’s worth mentioning that everything we built locally was able to be replicated in our CI/CD pipeline (using GitHub Actions). For an example, see our GitHub repo.

Onto the fun part! While implementing the local cache, four specific challenges needed to be overcome:

1 - Native integration with Panther’s CLI tools

Panther’s panther_analysis_tool is used for all Panther testing and detection management. Luckily, the code for interacting with Panther’s production DynamoDB table is available as part of Panther’s standard helper library on GitHub. Adding a simple check for an environment variable there allows us to switch between the local instance and the production instance. Testing this setup showed that no changes to the existing detection code were necessary to use the local cache:

    import os

    # Use the local DynamoDB endpoint whenever LOCAL_DYNAMO_URL is set
    if os.environ.get("LOCAL_DYNAMO_URL") is not None:
        endpoint = "http://localhost:8000"
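For readers who want to see how such a switch might feed into a DynamoDB client, here is a small sketch. It is a variation on the check above rather than the helper library’s actual code: it reads the endpoint from the variable’s value instead of hardcoding it, and the function name, region, and table name are all hypothetical.

```python
import os


def dynamo_client_kwargs(environ=os.environ):
    """Return keyword arguments for a boto3 DynamoDB resource."""
    local_url = environ.get("LOCAL_DYNAMO_URL")
    if local_url:
        # Local DynamoDB: override the endpoint. A region must still be set;
        # the value used here is an arbitrary placeholder.
        return {"endpoint_url": local_url, "region_name": "us-east-1"}
    # Production: let boto3 resolve the endpoint and region as usual.
    return {}


# Usage, assuming boto3 is installed and a table with this (made-up) name exists:
#   import boto3
#   table = boto3.resource("dynamodb", **dynamo_client_kwargs()).Table("my-cache-table")
```

Keeping the decision in one function means detection code never needs to know which table it is talking to.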

2 - AWS Credential Management

Local DynamoDB requires AWS credentials to function correctly. The credentials don’t need to be valid, but they must be configured in the same way as real AWS credentials in the AWS CLI**. Setting up credentials in a complex cloud development environment (where every developer has a different setup) can also be tricky. To solve these issues, we back up the existing AWS credentials file, if it exists, and create a new file with a dummy default entry that we know works. When the cache is stopped, the original credentials are restored. Simple!

**Two quick notes:

- The local DynamoDB service looks for default credentials in ~/.aws/credentials. If that file is overwritten with real credentials after the cache starts, the cache will still function, but the previously backed-up credentials will be restored when the cache is turned off.

- AWS SSO uses a different directory, and will not interfere in any way with this process.
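The backup-and-restore step can be sketched as a context manager. This is an assumed Python rendering of the idea, not Cedar’s actual script; the dummy key values, the `.bak` suffix, and the function name are placeholders.

```python
from contextlib import contextmanager
from pathlib import Path
import shutil

DUMMY_CREDENTIALS = (
    "[default]\n"
    "aws_access_key_id = dummy\n"
    "aws_secret_access_key = dummy\n"
)


@contextmanager
def dummy_aws_credentials(path=None):
    # Default to the file local DynamoDB reads; tests can pass another path.
    path = Path(path) if path is not None else Path.home() / ".aws" / "credentials"
    backup = path.with_name(path.name + ".bak")
    had_original = path.exists()
    if had_original:
        shutil.copy2(path, backup)  # keep the developer's real credentials
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(DUMMY_CREDENTIALS)
    try:
        yield path
    finally:
        if had_original:
            backup.replace(path)  # restore, overwriting whatever is there now
        else:
            path.unlink(missing_ok=True)
```

Restoring in a `finally` block means the original file comes back even if the wrapped work fails partway through.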

3 - Abstracting the Interface

At this point, the local cache works with the Panther Analysis Tool (yay!); however, using Amazon’s native REST API for DynamoDB can be quite cumbersome, with long CLI commands and limited operations. This prompted us to write a simple command line interface for interacting with the cache. The CLI simply uses pipenv to create aliases for common commands. Some of these wrap fun bash scripts that add functionality such as a pretty dump of keys and values (thank you jq!), key/value manipulation, and more (see all of the available commands in our public GitHub repo!).
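The real commands are bash scripts built on jq. Purely to illustrate what a “pretty dump” has to do, here is a Python sketch that flattens DynamoDB’s typed attribute format (for example `{"N": "5"}`) into plain JSON. The attribute names in the usage example are assumptions, not Panther’s confirmed schema.

```python
import json


def plain_value(attr):
    """Convert one DynamoDB-typed attribute, e.g. {"N": "5"}, to a Python value."""
    (type_tag, value), = attr.items()
    if type_tag == "N":
        # DynamoDB transmits numbers as strings.
        return int(value) if value.lstrip("-").isdigit() else float(value)
    if type_tag == "SS":
        return sorted(value)  # string sets are unordered; sort for stable output
    if type_tag == "M":
        return {k: plain_value(v) for k, v in value.items()}
    if type_tag == "L":
        return [plain_value(v) for v in value]
    return value  # "S", "BOOL", and any type not handled above


def pretty_dump(items):
    plain = [{k: plain_value(v) for k, v in item.items()} for item in items]
    return json.dumps(plain, indent=2, sort_keys=True)
```

For example, `pretty_dump([{"key": {"S": "rule-1"}, "intCount": {"N": "5"}}])` yields a JSON list with one object whose `intCount` is the integer 5.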

4 - Pre-populating the Cache for Testing

You may ask: Why would I ever want to pre-populate the cache? Mocking is Panther’s currently recommended solution for testing, but, as mentioned earlier, it falls short for us in two ways:

- The caching helper functions can return a count, stringSet, or dictionary, but mocking doesn’t currently support those types and must return a string. This requires detections to handle the test case with specific logic that doesn’t match the production use case.

- Mocks would have to be run repeatedly to achieve the desired DynamoDB state, which is not economical and takes too much time for high threshold detections. Instead, we want to push a button to load all values, including the event count that would trigger the threshold.

With Cedar’s local caching implementation, detections that use caching can store test data as the first test in the detection’s schema file. That test always returns “False,” so it never exercises the real detection logic or causes errors. Because this fake test always appears first in the testing order, we know where to look for the data and can use it to dynamically load DynamoDB objects tied to specific detections. Running the local cache “populate-tests” command finds these objects and automatically loads them into the cache (thank you yq!).
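The lookup logic can be sketched in Python over an already-parsed schema file. `Tests`, `ExpectedResult`, and `Log` are the usual fields in a Panther rule spec; the `cacheData` field, the entry layout, and both function names are hypothetical, and the real command works with yq against DynamoDB rather than a dictionary.

```python
def cache_items_from_spec(spec):
    """Return the cache entries stored in the first test of a parsed rule spec."""
    tests = spec.get("Tests") or []
    if not tests:
        return []
    first = tests[0]
    # The seed test must never alert, so it is declared with ExpectedResult False.
    if first.get("ExpectedResult") is not False:
        return []
    return first.get("Log", {}).get("cacheData", [])


def populate(cache, specs):
    """Load seed entries from every spec into a dict-like cache; return the count."""
    loaded = 0
    for spec in specs:
        for entry in cache_items_from_spec(spec):
            cache[entry["key"]] = entry["value"]
            loaded += 1
    return loaded
```

With a threshold detection, the seed entry can hold a count just below the threshold, so a single test event is enough to trip the alert.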

Wrapping up

We have been able to prioritize detections-as-code by partnering with Panther as we build a modern Security program, and having a simple local DynamoDB table, accessible by all detections, truly opens the door to possibilities. Caching allows us to maintain state in a way that’s optimized for our environment, build dynamic enrichment tables, link together disparate data between rule runs, and even build complex rule logic that pulls from a dozen different log sources and detections. Put simply, developing detections can be hard, but the right technical tools and partners have helped us explore new ways to provide context and filtering in our detection & response program.

Resources

To learn more about the Cedar Security program, please check out some of our work on GitHub.

To learn more about opportunities at Cedar, check out Cedar’s careers page!

TJ Smith is a Technical Lead on Cedar’s Security Operations Team. To learn more about TJ, connect with him on LinkedIn here.

Jon Massari is a security analyst on the Security Operations Team at Cedar. Connect with him on LinkedIn here.